States Move to Regulate AI Therapy Apps Amid Rising Concerns

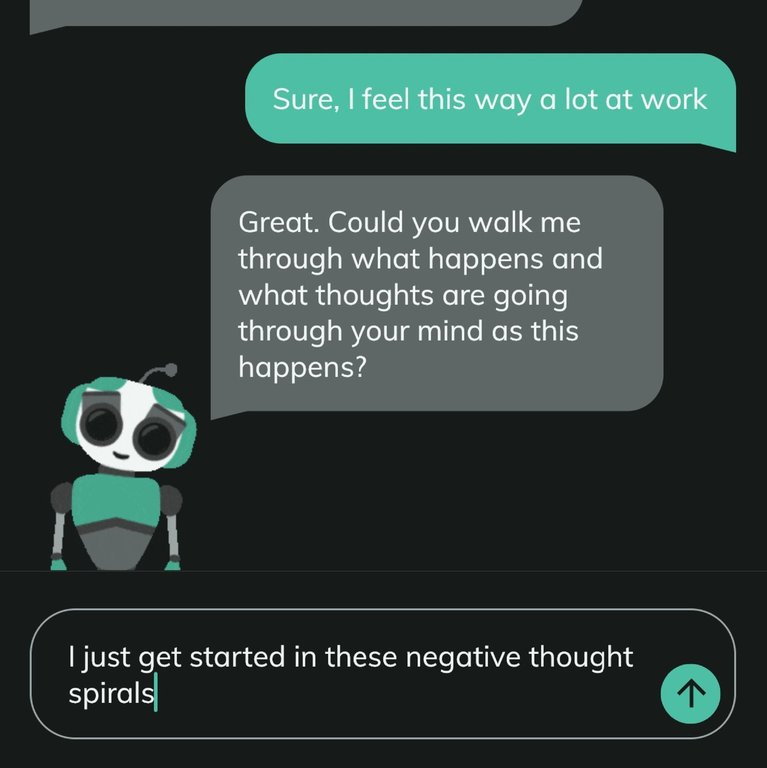

The rapid proliferation of artificial intelligence (AI) therapy applications has prompted several U.S. states to regulate them in order to protect users. As more people turn to AI for mental health support, states such as **Illinois**, **Nevada**, and **Utah** have begun enacting laws to address the risks these technologies pose. Mental health advocates and app developers alike regard these laws as a necessary but insufficient step in a field that is changing faster than legislation can follow.

Karin Andrea Stephan, CEO and co-founder of the mental health chatbot app **Earkick**, highlighted the urgency of the situation, stating, “The reality is millions of people are using these tools and they’re not going back.” This sentiment reflects a growing reliance on AI for mental health advice, especially amid a nationwide shortage of mental health professionals.

State Regulations and Their Limitations

The recently enacted state laws vary significantly in their approach. For instance, **Illinois** and **Nevada** have taken a strict stance by banning AI applications that claim to provide mental health treatment. Violations can result in hefty fines, with penalties reaching up to **$10,000** in Illinois and **$15,000** in Nevada. Meanwhile, **Utah** has imposed restrictions on therapy chatbots, mandating that they protect users’ health information and clearly disclose their non-human status.

This patchwork of regulations has raised concerns among mental health advocates and app developers alike. Many argue that the laws do not adequately cover general-purpose chatbots such as **ChatGPT**, which are not explicitly marketed for therapy but are frequently used by people seeking mental health support. In some reported cases, users suffered severe consequences, including losing touch with reality or experiencing suicidal thoughts, after engaging with these platforms.

Vaile Wright, who oversees health care innovation at the **American Psychological Association**, acknowledged the potential benefits of well-designed AI apps. She stated, “Mental health chatbots that are rooted in science, created with expert input and monitored by humans could change the landscape.” However, Wright emphasized the need for comprehensive federal regulations to ensure that these technologies serve their intended purpose without harming users.

Federal Oversight in the AI Therapy Space

In light of these developments, the **Federal Trade Commission (FTC)** recently announced inquiries into seven AI chatbot companies, including major players like **Google**, **Meta** (parent company of Facebook and Instagram), and **Snapchat**. The focus of these inquiries is to evaluate how these companies assess and mitigate potential negative impacts of their technologies on young users.

Additionally, the **Food and Drug Administration (FDA)** is set to convene an advisory committee on **November 6, 2025**, to discuss generative AI-enabled mental health devices. Measures that federal agencies could consider include restrictions on marketing practices, requirements for user disclosures, and legal protections for individuals who report unethical practices.

Despite the growing interest in AI therapy, experts caution that today’s chatbots often fall short of the emotional and ethical standards upheld by human therapists. In March, a team from **Dartmouth College** published a randomized clinical trial of a generative AI chatbot called **Therabot**, designed to assist individuals diagnosed with anxiety, depression, or eating disorders. The study found that users reported symptom relief similar to that achieved through traditional therapy. However, the researchers noted that further studies are needed to establish whether such technologies are effective at a larger scale.

As AI therapy applications continue to evolve, regulators face the challenge of keeping pace with innovation while ensuring user safety. Advocates like **Kyle Hillman**, who lobbied for the laws in Illinois and Nevada, emphasized that while these apps can provide a degree of support, they cannot replace the nuanced care offered by trained professionals. He remarked, “Not everybody who’s feeling sad needs a therapist, but for people with real mental health issues or suicidal thoughts, providing a bot instead of a qualified professional is a privileged position.”

The debate over AI therapy apps underscores the need for a regulatory framework that prioritizes user safety while still allowing innovation in mental health care. As more people turn to these technologies for support, the pressure to regulate them effectively will only grow.
