States Race to Regulate Fast-Growing AI Therapy Apps Amid Concerns

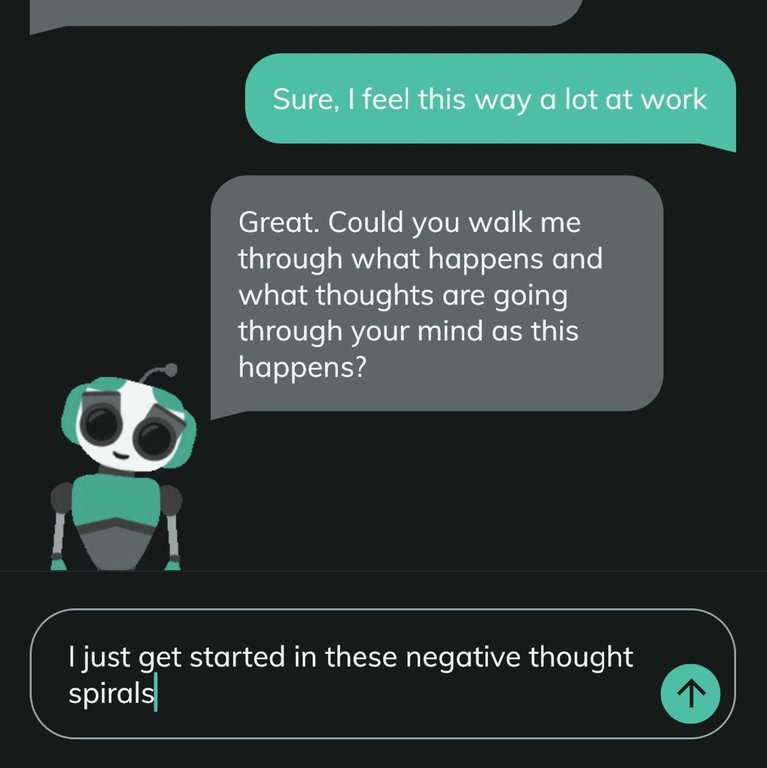

Regulators are grappling with the rapid evolution of artificial intelligence (AI) therapy applications as more individuals seek mental health support through these digital platforms. In response to this growing trend, several U.S. states have initiated regulations targeting AI therapy apps, although experts warn that these measures may not sufficiently protect users or hold app developers accountable.

California, Illinois, Nevada, Utah, Pennsylvania, and New Jersey have enacted various laws this year aimed at overseeing AI-driven mental health services. These regulations come amid increasing public reliance on AI for mental health advice, particularly in light of the ongoing shortage of qualified mental health professionals. Yet the patchwork of state laws lacks a cohesive framework, leaving many critical questions unanswered.

Karin Andrea Stephan, CEO and co-founder of the mental health chatbot app Earkick, said the shift toward these tools is unlikely to reverse. “The reality is millions of people are using these tools and they’re not going back,” she said.

State Regulations Vary Significantly

The state laws take markedly different approaches. Illinois and Nevada have banned the use of AI for mental health treatment outright, with fines of up to $10,000 in Illinois and $15,000 in Nevada. Utah, by contrast, requires therapy chatbots to protect users’ health information and to disclose clearly that they are not human.

The laws’ effect on users varies. Some apps have blocked access in states with bans, while others have kept operating while they wait for legal clarification. Notably, many of these laws do not cover general-purpose chatbots like ChatGPT, which are not marketed for therapy but are frequently used by people seeking mental health support.

The risks are not hypothetical. There have been reports of users losing touch with reality or taking their own lives after interacting with chatbots.

Vaile Wright, who oversees health care innovation at the American Psychological Association, acknowledged that AI apps could help close the mental health care gap. She noted that mental health chatbots designed responsibly, with expert input and human oversight, could offer support before people reach a crisis. Still, Wright argued that federal regulation is needed to ensure these tools are safe and effective.

Federal Oversight on the Horizon

Recognizing the need for stronger oversight, the Federal Trade Commission (FTC) recently announced inquiries into seven AI chatbot companies, including those owned by Meta (Facebook and Instagram), Google, OpenAI (ChatGPT), and others. These inquiries aim to assess how these companies monitor the potential negative impacts of their technology on children and adolescents.

The Food and Drug Administration will also convene an advisory committee on November 6, 2025, to review generative AI-enabled mental health devices. Possible federal measures include restricting how the apps are marketed, limiting addictive features, requiring clear disclosures that the apps are not medical providers, and offering legal protections to people who report harmful practices.

The range of AI mental health products, from “companion apps” to self-described “AI therapists,” makes comprehensive regulation difficult. Some states have targeted companion apps designed purely for social interaction that do not claim to provide mental health care. The laws in Illinois and Nevada go further, banning outright any product that claims to offer mental health treatment.

Stephan said Illinois’s law in particular is ambiguous, because its definitions remain unclear. Earkick initially avoided calling its chatbot a therapist, but later adopted the term because users were already using it and it helped the app show up in searches. The app has since changed its description from “therapist” to “chatbot for self-care,” stressing that it is not intended to diagnose or treat mental health conditions.

The app includes a “panic button” that lets users contact a trusted person in a crisis, but Stephan said it was never designed as a suicide prevention tool. She also doubted that state regulations can keep pace with how quickly AI is evolving.

While some apps have blocked access in states with bans, others argue that such bans could hinder the development of responsible tools. The AI therapy app Ash, for instance, has urged users in Illinois to lobby their lawmakers, arguing that the state’s law bans apps like Ash while leaving unregulated chatbots free to cause harm.

Mario Treto Jr., Secretary of the Illinois Department of Financial and Professional Regulation, affirmed the state’s objective of ensuring that licensed therapists are the sole providers of mental health treatment. “Therapy is more than just word exchanges,” Treto stated. “It requires empathy, clinical judgment, and ethical responsibility, none of which AI can truly replicate right now.”

In March 2025, a research team at Dartmouth College published the first known randomized clinical trial of a generative AI chatbot for mental health treatment, called Therabot. The study enrolled people diagnosed with anxiety, depression, or eating disorders. Participants rated Therabot similarly to a human therapist and reported significantly reduced symptoms after eight weeks of use. Importantly, every interaction was monitored by human professionals who intervened when necessary.

Clinical psychologist Nicholas Jacobson, who leads the Therabot research, urged caution as the field develops. He noted that although the results were promising, larger studies are needed to confirm the app’s effectiveness.

The current crop of AI therapy apps raises pressing questions about safety and effectiveness. Many are designed to keep users engaged by affirming whatever they say, which blurs the line between companionship and therapy. As regulators and developers work through these challenges, both effective oversight and responsibly built AI tools will be essential.

Regulators and mental health policy advocates say they are open to revising current measures, while acknowledging that today’s AI therapy apps are not a comprehensive answer to the shortage of mental health providers. “Not everybody who’s feeling sad needs a therapist,” said Kyle Hillman, who lobbied for the bills in Illinois and Nevada. How far people who genuinely need support should rely on AI, however, remains contested.
